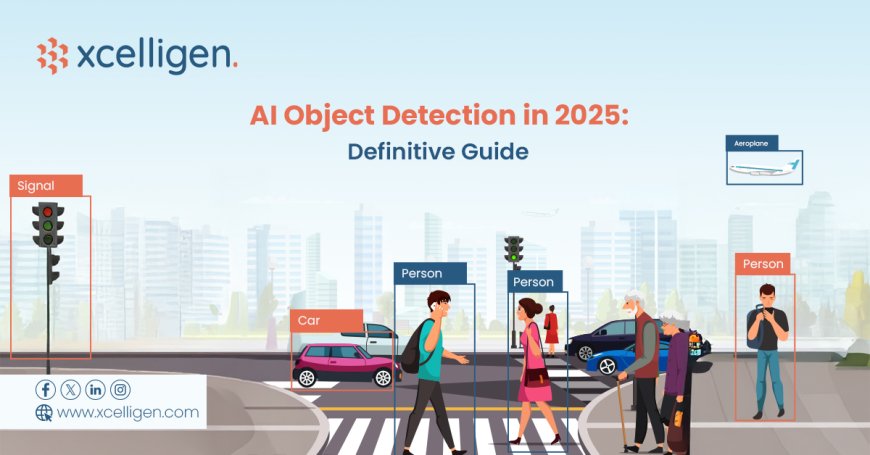

AI Object Detection in 2025: Definitive Guide

Explore 2025's most advanced object detection models, their real-world impact, and why top agencies partner with AI leaders like Xcelligen.

In 2025, object detection is expanding from a computer vision subfield into a critical infrastructure component for AI-driven defense, healthcare, and advanced manufacturing. The field has already matured from Haar cascades to convolutional networks, and 2025 marks another step change. McKinsey reports that 84% of AI-powered enterprises use scaled object detection in at least one critical system.

The real change is object detection's shift from narrow classifiers to generalized perception engines with multimodal reasoning, zero-shot learning, and federated deployment across edge, cloud, and secure environments. Next-generation object detection models process drone footage, scans, and IoT sensor data using self-supervised learning and zero-shot generalization.

This guide covers next-generation object detection architectures, use cases, and enterprise strategies. It also shows how leaders like Xcelligen provide AI-driven defense, healthcare, and industrial solutions.

What is object detection?

Object detection is a computer vision technique that finds, locates, and classifies multiple objects in an image or video frame, producing a bounding box, a class label, and a confidence score for each one. Modern detectors are deep learning models built on convolutional neural networks (CNNs) or transformers, such as YOLO and Faster R-CNN, and many of them run in real time.
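To make the definition concrete, here is a minimal sketch of what detector output typically looks like: a list of boxes, each with a class label and a confidence score. It also shows intersection-over-union (IoU), the overlap measure used for evaluation, and greedy non-maximum suppression (NMS), the post-processing step many YOLO-family detectors use to remove duplicate boxes (DETR-style set prediction is designed to avoid it). The `Detection` class and the function names are illustrative assumptions, not any library's API, and boxes are assumed to be in `(x1, y1, x2, y2)` pixel format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detector output: an axis-aligned box plus label and confidence."""
    x1: float
    y1: float
    x2: float
    y2: float
    label: str
    score: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Detection, b: Detection) -> float:
    """Intersection-over-union of two boxes in (x1, y1, x2, y2) format."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def nms(dets: list[Detection], iou_threshold: float = 0.5) -> list[Detection]:
    """Greedy per-class NMS: keep the highest-scoring box, drop overlapping ones."""
    kept: list[Detection] = []
    for det in sorted(dets, key=lambda d: d.score, reverse=True):
        # Suppress only if an already-kept box of the same class overlaps too much.
        if all(k.label != det.label or iou(k, det) <= iou_threshold for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    raw = [
        Detection(10, 10, 110, 110, "vehicle", 0.92),
        Detection(12, 8, 108, 112, "vehicle", 0.85),  # near-duplicate of the first box
        Detection(200, 50, 260, 170, "person", 0.77),
    ]
    for d in nms(raw):
        print(d.label, d.score)
```

The 0.5 IoU threshold is a common default rather than a fixed rule; crowded scenes often need a higher value so that genuinely adjacent objects are not suppressed.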

Top Object Detection Applications in 2025 Across Key Industries

- Autonomous Systems: UAVs and autonomous vehicles rely on object detection for spatial awareness and hazard avoidance. In 2025, LiDAR and vision sensor systems run real-time multimodal detection models built on edge-deployable architectures such as MobileViT and YOLO-NAS.
- Healthcare and Diagnostics: AI object detection enables automated tumor detection, surgical navigation, and anomaly triage in radiology. Xcelligen-supported healthcare platforms now incorporate vision transformers into DICOM analysis pipelines, achieving an 18% gain in diagnostic precision over baseline CNN models.
- Surveillance and National Security: AI vision is now core to defense intelligence. Detection models embedded in drones and border systems can identify unauthorized personnel, weapons, and vehicles while conforming to NIST SP 800-53 and CMMC security standards.
- Retail and Smart Infrastructure: In smart cities, object detection powers traffic analytics, automated checkout, and shelf auditing. With inference latency under 20 ms, edge-deployed AI vision is now feasible even in small-format retail environments.

Quick Comparison of Leading Object Detection Models in 2025

In 2025, object detection is led by vision transformers (ViTs), diffusion-based models, and other attention-based architectures. Models like YOLOv9, DETR 2.0, RT-DETR, and SAM now set the benchmark.

| Model | Backbone | Latency (ms) | mAP@0.5:0.95 | Edge deployable | Key feature |
|---|---|---|---|---|---|
| YOLOv9 | GELAN (convolutional) | 9 | 55.2 | Yes | Programmable Gradient Information (PGI); fast on edge |
| DETR 2.0 | ViT + deformable attention | 21 | 56.7 | Partially | End-to-end object set prediction |
| RT-DETR | ResNet-101 + ViT | 13 | 54.8 | Yes | Real-time global attention pipeline |
| SAM (Meta AI) | ViT-Huge | 22 | N/A (segmentation) | No | Zero-shot segmentation |
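The mAP@0.5:0.95 column follows the COCO convention: average precision (AP) is computed at ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, averaged over object classes, and then averaged over the thresholds. With $C$ the set of classes and $\mathrm{AP}_c(t)$ the average precision for class $c$ at IoU threshold $t$:

```latex
\mathrm{mAP}@[0.5{:}0.95] \;=\; \frac{1}{10} \sum_{t \in \{0.50,\, 0.55,\, \dots,\, 0.95\}} \frac{1}{|C|} \sum_{c \in C} \mathrm{AP}_c(t)
```

Because the strictest thresholds require almost pixel-exact boxes, this metric rewards localization quality far more than mAP@0.5 alone. A class-agnostic segmentation model like SAM is not scored on it, which is why its entry is N/A.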

Real-World Applications of AI Object Detection in 2025

AI object detection has moved beyond theoretical models into high-performance real-world deployments across security-sensitive and regulation-heavy sectors. Below are three illustrative implementations demonstrating how modern detection frameworks are used in production.

- Defense UAV Detection: A national defense agency deployed RT-DETR with TensorRT on Jetson modules, achieving 92%+ accuracy and under 35 ms latency while maintaining NIST SP 800-53 compliance.
- Medical Diagnostics: RetinaNet was adapted with domain tuning and edge optimization, boosting mammography accuracy by 18% with explainable overlays and DICOM integration.
- Industrial Inspection: A leading AI solutions partner such as Xcelligen developed a compact YOLOv9 system for real-time defect detection, cutting mis-picks by 42% with sub-50 ms inference on ARM edge devices.

Beyond Bounding Boxes: The Rise of Unified Perception Models

Object detection in 2025 has expanded into multimodal and multi-task learning. Instead of training separate models for segmentation, classification, and detection, practitioners increasingly use unified frameworks such as Pix2Seq v2, GroundingDINO, and OWL-ViT, which handle several tasks or open-vocabulary queries within a single model.

These models accept image and text (or task-prompt) inputs and support:

- Zero-shot grounding: Detect "a vehicle carrying hazardous material" without class-specific training.
- Cross-modal prompts: Segment only the objects mentioned in a natural-language prompt (e.g., "cracked solar panels on the left wing").
- Stream fusion: Ingest video, LiDAR, and thermal data in real time.

Flexible, cross-domain unified perception models are replacing single-task detectors, enabling real-time, context-aware detection in edge and regulated environments. Tooling such as Hugging Face Transformers and NVIDIA Triton Inference Server is used to build and serve these models in next-generation surveillance, disaster-response, and remote-diagnostics systems.
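Open-vocabulary detectors such as OWL-ViT make zero-shot grounding possible by embedding candidate image regions and text queries into a shared space and scoring region/query pairs, so a new class is just a new text prompt. The sketch below shows only that matching step, simplified to plain cosine similarity over tiny hand-written vectors. In a real model the embeddings come from its image and text encoders and the scoring includes learned components; the 3-dimensional vectors, region IDs, and 0.8 threshold here are hypothetical.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def ground(
    regions: dict[str, list[float]],
    queries: dict[str, list[float]],
    threshold: float = 0.8,
) -> list[tuple[str, str, float]]:
    """Match each region to its best-scoring text query; keep matches above threshold."""
    matches = []
    for region_id, r_emb in regions.items():
        best_query, best_score = max(
            ((q, cosine(r_emb, q_emb)) for q, q_emb in queries.items()),
            key=lambda pair: pair[1],
        )
        if best_score >= threshold:
            matches.append((region_id, best_query, round(best_score, 3)))
    return matches


if __name__ == "__main__":
    # Hypothetical embeddings; real encoders produce hundreds of dimensions.
    regions = {"box_0": [0.9, 0.1, 0.0], "box_1": [0.0, 0.2, 0.95]}
    queries = {
        "a vehicle carrying hazardous material": [1.0, 0.0, 0.1],
        "cracked solar panels": [0.0, 0.1, 1.0],
    }
    print(ground(regions, queries))
```

Because classes are defined only by their text embeddings, adding a new query requires no retraining, which is the property the zero-shot grounding bullet describes.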

Challenges in 2025 Object Detection Pipelines

Despite architectural advances, object detection pipelines in 2025 still face persistent operational challenges. Labeling latency, inference drift under real-world conditions, and fragmented deployment targets continue to slow production scaling:

- Data labeling bottlenecks: Even with SAM-assisted annotation and fine-tuning, domain-specific bounding box labeling slows deployment.
- Inference drift: Real-world conditions (e.g., weather, shifting camera angles) cause live performance drops that benchmarking does not catch.
- Deployment fragmentation: One model rarely fits every target, from Jetson Nano to AWS Inferentia and on-premise clusters.

That's why AI service leaders like Xcelligen integrate continual learning pipelines, model-specific fallback strategies, and cross-platform optimization tools (ONNX, TensorRT, TVM) into their full-stack deployments, so that models not only perform at launch but sustain that performance in production.
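One common shape for a fallback strategy is an ordered chain of backends: try the most optimized runtime for the target first (for example, a TensorRT engine on a Jetson device) and drop to a more portable one (an ONNX export, then plain CPU execution) if it is unavailable or fails. The sketch below models each backend as a plain callable; the backend names, stub functions, and `run_with_fallback` helper are hypothetical and do not call any real runtime API.

```python
from typing import Callable, Sequence

# Hypothetical backend type: (name, function mapping raw frame bytes to detections).
Backend = tuple[str, Callable[[bytes], list]]


def run_with_fallback(frame: bytes, backends: Sequence[Backend]) -> tuple[str, list]:
    """Try each backend in priority order and return the first successful result."""
    errors = []
    for name, infer in backends:
        try:
            return name, infer(frame)
        except Exception as exc:  # in production, catch narrower runtime errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


def tensorrt_stub(frame: bytes) -> list:
    raise RuntimeError("engine not built for this device")  # simulated failure


def onnx_stub(frame: bytes) -> list:
    return [("vehicle", 0.91)]  # simulated detections


def cpu_stub(frame: bytes) -> list:
    return [("vehicle", 0.88)]  # simulated detections


if __name__ == "__main__":
    chain = [("tensorrt", tensorrt_stub), ("onnx", onnx_stub), ("cpu", cpu_stub)]
    used, dets = run_with_fallback(b"\x00" * 16, chain)
    print(used, dets)
```

Returning the name of the backend that actually served the request makes fallbacks visible in telemetry, so a fleet silently running on its slowest path shows up in monitoring.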

Observability and Explainability in Production

Gartner estimates that 75% of AI failures by 2026 will stem from model opacity, where decisions can't be traced, understood, or justified. Explainability isn't optional for mission-critical object detection systems; it's foundational. Modern production pipelines now integrate:

- SHAP or Grad-CAM overlays on bounding boxes show which image features drive each detection.
- Grafana dashboards display inference-level telemetry: prediction confidence, frame processing time, failure rates, and IoU.
- Drift detection mechanisms automatically trigger retraining or rollback when accuracy drops or distribution shifts exceed set thresholds (e.g., a >5% weekly dip in confidence).
- In human-in-the-loop feedback loops, low-confidence detections (confidence < 0.6) are flagged, annotated by reviewers, and fed back into training for continuous learning.
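The drift and human-in-the-loop practices can be sketched together: compare this week's mean detection confidence with a baseline week and raise a drift alert when it drops by more than 5%, and route any detection below the 0.6 confidence threshold to a review queue. Both thresholds come from the list above; reading "confidence dip" as a relative drop in mean confidence, and the function names, are assumptions. Mean confidence is only a proxy signal, and production drift detectors often also compare input data distributions.

```python
from statistics import fmean

DRIFT_THRESHOLD = 0.05  # assumed meaning: >5% relative weekly drop in mean confidence
REVIEW_THRESHOLD = 0.6  # detections below this confidence go to human annotators


def confidence_dip(baseline: list[float], current: list[float]) -> float:
    """Relative drop in mean confidence from a baseline week to the current week."""
    base, cur = fmean(baseline), fmean(current)
    return (base - cur) / base if base > 0 else 0.0


def check_drift(baseline: list[float], current: list[float]) -> bool:
    """True when the weekly dip exceeds the threshold (would trigger retrain/rollback)."""
    return confidence_dip(baseline, current) > DRIFT_THRESHOLD


def route_for_review(detections: list[tuple[str, float]]) -> tuple[list, list]:
    """Split (label, confidence) pairs into auto-accepted and flagged-for-annotation lists."""
    accepted = [d for d in detections if d[1] >= REVIEW_THRESHOLD]
    flagged = [d for d in detections if d[1] < REVIEW_THRESHOLD]
    return accepted, flagged


if __name__ == "__main__":
    last_week = [0.91, 0.88, 0.93, 0.90]
    this_week = [0.84, 0.80, 0.86, 0.83]
    dip = confidence_dip(last_week, this_week)
    print(f"dip: {dip:.1%}, drift: {check_drift(last_week, this_week)}")
    accepted, flagged = route_for_review([("vehicle", 0.92), ("person", 0.41)])
    print("accepted:", accepted, "flagged:", flagged)
```

Whether a drift alert retrains automatically or only opens a ticket is a policy decision; in regulated settings a human sign-off before rollout is the more cautious choice.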

All of Xcelligen's AI implementations are built observability-first, combining strong model performance with systems that are explainable, traceable, and auditable, which makes them well suited to mission-critical environments.

Why Forward-Looking Enterprises Choose Xcelligen

Xcelligen specializes in engineering mission-grade object detection systems that move seamlessly from proof-of-concept to operational scale. Each deployment is meticulously architected for:

- Security & Compliance: Fully aligned with ISO 27001, CMMC, NIST SP 800-53, and FedRAMP mandates.
- Low-Latency Performance: Optimized for edge inference below 20 ms using ONNX, TensorRT, and quantization strategies.
- Built-in Explainability: SHAP overlays and confidence thresholds are rendered directly within end-user UIs.
- Full-Stack Observability: End-to-end telemetry, including drift diagnostics, IoU tracking, and automated retraining triggers.
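Quantization for edge inference typically maps 32-bit floating-point weights or activations to 8-bit integers with an affine scheme, q = round(x / scale) + zero_point, which cuts memory roughly fourfold and lets accelerators use integer arithmetic. The sketch below shows per-tensor asymmetric int8 quantization and its round-trip error, which stays within about one quantization step. It is a simplified illustration under those assumptions; toolchains such as TensorRT and ONNX Runtime add calibration data and often per-channel scales, which are omitted here.

```python
def quant_params(values: list[float], qmin: int = -128, qmax: int = 127) -> tuple[float, int]:
    """Per-tensor asymmetric scale and zero-point covering the data range."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must include 0.0
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = round(qmin - lo / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values: list[float], scale: float, zero_point: int,
             qmin: int = -128, qmax: int = 127) -> list[int]:
    """Map floats to clamped int8 codes."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues: list[int], scale: float, zero_point: int) -> list[float]:
    """Map int8 codes back to approximate floats."""
    return [(q - zero_point) * scale for q in qvalues]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 2.0]
    scale, zp = quant_params(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    print("scale:", round(scale, 5), "zero_point:", zp)
    print("int8:", q)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Forcing the range to include zero keeps 0.0 exactly representable, which matters for zero padding and ReLU outputs in detection backbones.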

In one defense engagement, Xcelligen enabled real-time detection under constrained bandwidth conditions, improving system reliability by 41% while maintaining auditable compliance at every stage.

The Future of Detection Starts with Xcelligen

In 2025, object detection is a core requirement for real-time vision systems in autonomy, diagnostics, infrastructure, and defense. Useful pipelines are accurate, robust, explainable, and adaptable.

Xcelligen, a reliable AI/ML engineering partner, helps your detection stack perform at scale, strengthens its security, and plans for continuous, accountable deployment.

Ready to build mission-critical AI vision systems? Schedule your demo with Xcelligen today.